
Professor Jonas Unger leads the Computer Graphics and Image Processing Group and the Visual Computing Laboratory at the Division for Media and Information Technology (MIT) at the Department of Science and Technology (ITN).

Contact (full address): Office: Kopparhammaren G520, Phone: +46 11 363436


Recent publications:

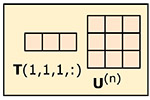

A Unified Framework for Compression and Compressed Sensing of Light Fields and Light Field Videos (ACM ToG 2019, SIGGRAPH) |

Light Field Video Compression and Real Time Rendering (Pacific Graphics 2019) |

Single-frame Regularization for Temporally Stable CNNs (CVPR 2019 - preprint) |

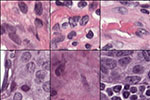

A closer look at domain shift for deep learning in histopathology (MICCAI 2019 Computational Pathology Workshop COMPAY) |

Compact and intuitive data-driven BRDF models (The Visual Computer 2019) |

GPU Accelerated Sparse Representation of Light Fields (VISAPP 2019) |

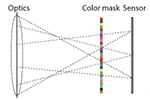

Multi-Shot Single Sensor Light Field Camera Using a Color Coded Mask (EUSIPCO 2018) |

HDR image reconstruction from a single exposure using deep CNNs (SIGGRAPH Asia 2017) |

Download open source code and open data from our projects here:

Synscapes: A Photorealistic Synthetic Dataset for Street Scene Parsing |

HDR image reconstruction from a single exposure using deep CNNs (SIGGRAPH Asia 2017) |

www.lumahdrv.org (Perceptual encoding of HDR video - open source) |

http://www.hdrv.org/vps/ (Capturing reality for computer graphics applications, Siggraph Asia '15 course material) |

http://www.dependsworkflow.net (Depends: Workflow Management Software for Visual Effects Production) |

www.hdrv.org (LiU HDRv Repository - HDR-video and Image based lighting) |

Research:

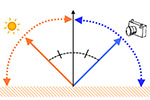

My research interests lie at the intersection of computer graphics/vision and image processing, with applications in e.g. high dynamic range (HDR) imaging, light field imaging, tone mapping, appearance capture, material modeling, photo-realistic image synthesis, and medical/scientific visualization of volumetric 3D data.

Scene capture for photo-realistic image synthesis and augmented reality

Related publications:

Capturing reality for computer graphics applications (SIGGRAPH Asia 2015 courses) |

Photorealistic rendering of mixed reality scenes (Eurographics 2015 STAR) |

Depends: Workflow Management Software for Visual Effects Production (DigiPro 2014) |

Spatially Varying Image Based Lighting using HDR-video (C&G 2013) |

Temporally and Spatially Varying Image Based Lighting using HDR-video (EUSIPCO 2013) |

Next Generation Image Based Lighting (SIGGRAPH 2011 Talk) |

Free form incident light fields (EGSR 2008) |

Spatially Varying Image Based Lighting by Light Probe Sequences, Capture, Processing and Rendering (Visual Computer 2007) |

Capturing and rendering with incident light fields (EGSR 2003) |

LiU HDRv Repository - HDR-video and Image based lighting www.hdrv.org |

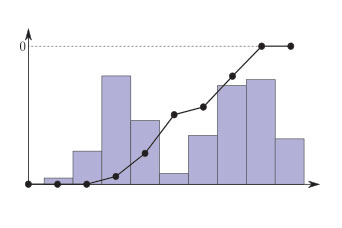

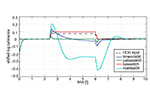

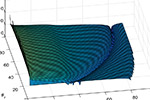

Image reconstruction and HDR-video capture

In this project, we are developing new cameras and algorithms for high dynamic range (HDR) video capture. The main theoretical result is a new framework for statistically based image reconstruction using input from multiple image sensors with different characteristics, e.g. different resolution, filters, or spectral response. Based on our framework, we have developed a number of algorithms for different cameras and sensor setups. One of the key contributions is that our algorithms perform the different steps in the "traditional" imaging pipeline (demosaicing, resampling, denoising, and image reconstruction) in a single, unified step instead of as a sequence of operations. This makes them both more accurate and easier to parallelize. The output pixels are reconstructed as a Maximum Likelihood estimate taking into account the heterogeneous sensor noise using adaptive filters. Based on our algorithms, we have developed multi-sensor HDR-video cameras, sequential exposure HDR-video cameras, and methods for HDR-video capture using off-the-shelf consumer cameras.
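As a rough illustration of the Maximum Likelihood idea mentioned above, the sketch below fuses several readings of a single pixel taken at different exposures. It assumes independent Gaussian noise with known variance, in which case the ML estimate reduces to an inverse-variance weighted mean. The function name `ml_radiance`, the sample layout, and the saturation threshold are hypothetical choices made for this example. The sketch leaves out demosaicing, resampling, and the adaptive filters of the framework described above.

```python
def ml_radiance(samples, saturation=0.95):
    """Fuse several readings of one pixel into a single radiance estimate.

    Each sample is (value, exposure, noise_var): a normalized sensor value in
    [0, 1], the relative exposure (gain times integration time), and the
    variance of the sensor noise in value units. All three are hypothetical
    inputs chosen for this sketch.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for value, exposure, noise_var in samples:
        if value >= saturation:
            continue  # clipped readings carry no usable information
        radiance = value / exposure               # refer the reading to scene radiance
        radiance_var = noise_var / exposure ** 2  # noise variance scales the same way
        weight = 1.0 / radiance_var               # inverse-variance weight
        weighted_sum += weight * radiance
        weight_total += weight
    if weight_total == 0.0:
        return None  # every reading was saturated
    return weighted_sum / weight_total


# Three readings of the same pixel at 1x, 4x and 16x exposure; the last is clipped.
readings = [(0.20, 1.0, 4e-4), (0.82, 4.0, 4e-4), (1.00, 16.0, 4e-4)]
estimate = ml_radiance(readings)
print(estimate)
```

In this example the 4x exposure receives 16 times the weight of the 1x exposure, because its noise is smaller once referred to radiance, so the fused value lies close to the longer unsaturated reading.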

Related publications:

HDR image reconstruction from a single exposure using deep CNNs (SIGGRAPH Asia 2017) |

High Dynamic Range Video - From acquisition to display and applications (Academic Press 2016) |

The HDR-Video Pipeline (Eurographics 2016) |

Adaptive dualISO HDR-reconstruction (EURASIP JIVP 2015) |

A Unified Framework for Multi-Sensor HDR Video Reconstruction (Signal Processing: Image Communication 2014) |

HDR reconstruction for alternating gain (ISO) sensor readout (Eurographics 2014) |

Unified HDR-reconstruction from raw CFA data (ICCP 2013) |

High Dynamic Range Video for Photometric Measurement of Illumination (Electronic Imaging 2007) |

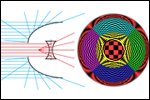

An Optical System for Single-Image Environment Maps (SIGGRAPH 2007 Poster) |

A real-time light probe (Eurographics '04 short paper) |

CENIIT - High Dynamic Range Video with Applications |

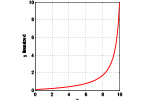

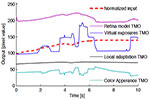

Tone mapping and HDR-video compression

Related publications:

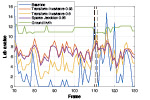

A comparative review of tone-mapping algorithms for high dynamic range video (Eurographics 2017) |

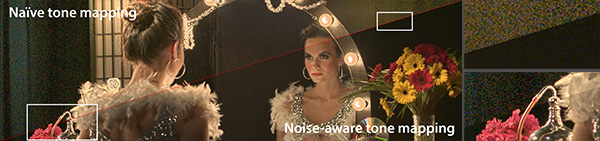

Real-time noise-aware tone mapping and its use in luminance retargeting (ICIP 2016) |

A High Dynamic Range Video Codec Optimized by Large Scale Testing (ICIP 2016) |

Luma HDRv: an open source HDR video codec optimized by large-scale testing (SIGGRAPH 2016 Talk) |


Real-time noise-aware tone mapping (SIGGRAPH Asia 2015) |

Perceptually based parameter adjustments for video processing operations. (SIGGRAPH 2014 Talk) |

Evaluation of Tone Mapping Operators for HDR Video (Computer Graphics Forum 2013) |

Survey and Evaluation of Tone Mapping Operators for HDR Video (SIGGRAPH 2013 Talk) |


Perceptual encoding of HDR video (open source) www.lumahdrv.org |

High Dynamic Range Video - From acquisition to display and applications (Academic Press 2016) |

Light field displays and automultiscopic viewing

Related publications:

Time-offset Conversations on an Automultiscopic Projector Array (CVPR - CCD 2016) |

An Auto-Multiscopic Projector Array for Interactive Digital Humans (SIGGRAPH 2015 E-Tech) |

Creating a life-sized automultiscopic Morgan Spurlock for CNN's "Inside Man" (SIGGRAPH 2014 Talk) |

Light field imaging

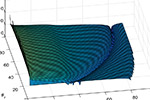

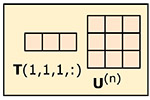

(Learning based sparse representations for visual data compression and imaging)

In this project, we are developing new representations, basis functions, for compression and efficient representation of visual data such as light fields, video sequences, and images. Previous methods have used analytical basis functions such as spherical harmonics, Fourier bases, and wavelets to represent visual data in a compact form. In this project we take a learning based approach, and train basis functions to adapt to the input data. Our learning based basis representations admit a very high sparsity, which enables data compression with real-time reconstruction and the application of compressed sensing methods for image and light field reconstruction. In our work, we have developed methods for compression of surface light fields encoding the full, pre-computed global illumination solution in complex scenes, for real-time product visualization using our GPU implementation. We have also developed compressed sensing algorithms, which for example allow for high quality light field rendering/capture using only 4% of the original data.

Related publications:

GPU Accelerated Sparse Representation of Light Fields (VISAPP 2019) |

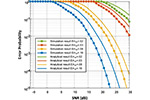

OMP-based DOA estimation performance analysis (Digital Signal Processing 2018) |

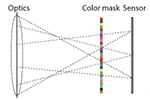

Multi-Shot Single Sensor Light Field Camera Using a Color Coded Mask (EUSIPCO 2018) |

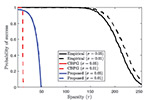

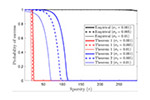

A Performance Guarantee for Orthogonal Matching Pursuit Using Mutual Coherence (Circuits, Systems and Signal Processing 2018) |

On Probability of Support Recovery for Orthogonal Matching Pursuit Using Mutual Coherence (IEEE Signal Processing Letters 2017) |

On local image completion using an ensemble of dictionaries (ICIP 2016) |

Compressive image reconstruction in reduced union of sub-spaces (Eurographics 2015) |

Learning Based Compression of Surface Light Fields for Real-time Rendering of Global Illumination Scenes (SIGGRAPH Asia '13 technical brief) |

Learning Based Compression for Real-Time Rendering of Surface Light Fields (SIGGRAPH 2013 poster) |

Geometry Independent Surface Light Fields for Real Time Rendering of Precomputed Global Illumination (SIGRAD 2011) |
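To give a feel for the sparse representations discussed in this light field project, the following is a minimal sketch of Orthogonal Matching Pursuit (OMP), the greedy sparse-coding method that several of the publications above analyze. It is a generic textbook formulation and not the group's GPU implementation. The tiny dictionary is hand-made for the example rather than learned from data, and the helper names `dot`, `solve` and `omp` are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def solve(matrix, rhs):
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def omp(signal, atoms, n_nonzero, tol=1e-10):
    """Greedy sparse coding: returns ({atom index: coefficient}, residual)."""
    support, coeffs = [], []
    residual = list(signal)
    for _ in range(n_nonzero):
        if math.sqrt(dot(residual, residual)) <= tol:
            break
        # Pick the unused atom most correlated with the current residual.
        scores = [0.0 if i in support else abs(dot(residual, atoms[i]))
                  for i in range(len(atoms))]
        best = max(range(len(atoms)), key=scores.__getitem__)
        if scores[best] <= tol:
            break
        support.append(best)
        # Least-squares refit on the whole support via the normal equations.
        gram = [[dot(atoms[i], atoms[j]) for j in support] for i in support]
        rhs = [dot(atoms[i], signal) for i in support]
        coeffs = solve(gram, rhs)
        approx = [sum(c * atoms[i][k] for c, i in zip(coeffs, support))
                  for k in range(len(signal))]
        residual = [s - a for s, a in zip(signal, approx)]
    return dict(zip(support, coeffs)), residual


# Toy dictionary of unit-norm atoms: the standard basis plus two Haar-like atoms.
atoms = [
    [1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0],
    [0.5, 0.5, 0.5, 0.5], [0.5, 0.5, -0.5, -0.5],
]
signal = [1.5, 1.5, 3.5, 1.5]  # equals 3 * atoms[4] + 2 * atoms[2]
code, residual = omp(signal, atoms, n_nonzero=2)
print(code, residual)
```

The signal has four samples but is reproduced exactly from two coefficients. This is the kind of sparsity that makes compression and compressed sensing possible when the dictionary fits the data well.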

Material capture and modeling

Material properties such as reflectance, color and textures play a key role in the visual appearance of objects. In photo-realistic and physically based image synthesis, we simulate how light interacts with the surfaces and the materials in a virtual scene. In this project, we are developing methods and equipment for measuring material properties of everyday surfaces, and mathematical models that describe these properties accurately and can be used efficiently in photo-realistic image synthesis.

Related publications:

Compact and intuitive data-driven BRDF models (The Visual Computer 2019) |

Efficient BRDF Sampling Using Projected Deviation Vector Parameterization (ICCV 2017) |

Differential appearance editing for measured BRDFs (SIGGRAPH 2016 Talk) |

S(wi)ss: A flexible and robust sub-surface scattering shader. (SIGRAD 2014) |


BRDF Models for Accurate and Efficient Rendering of Glossy Surfaces (ACM TOG, 2012) |

A versatile material reflectance measurement system for use in production (SIGRAD 2011) |

Performance relighting and reflectance transformation with time-multiplexed illumination (SIGGRAPH 2005) |

Image synthesis, GPU techniques, efficient rendering and volume visualization

Efficient processing and rendering algorithms are important aspects in computer graphics and computational imaging. Most of our algorithms are designed to allow for parallel computations and efficient GPU implementations. In the projects described below, we have developed algorithms for real-time ray-tracing, interactive visualization of volumetric 3D data from the medical domain, and 3D grids of spherical harmonics basis functions for efficient storage and representation of spatially varying real world lighting.

Related publications:

Contact Information:

| Address: | Jonas Unger, Department of Science and Technology, Linköping University, SE-601 74 Norrköping, Sweden |

| Office: | Kopparhammaren G520 |

| Phone: | +46 (0)11 363436 |

Archive:

| 2019-10-05: | Invited presentation on "Synthetic Data for Visual Machine Learning" at the Autonomous Driving workshop held at ICCV 2019. |

| 2019-09-10: | Our paper "A closer look at domain shift for deep learning in histopathology" has been accepted to the MICCAI 2019 Workshop COMPAY. |

| 2019-07-20: | Our paper: "A Unified Framework for Compression and Compressed Sensing of Light Fields and Light Field Videos" is presented at SIGGRAPH 2019. |

| 2019-06-04: | We are organizing the Scandinavian Conference on Image Analysis (SCIA) 2019 and the Swedish Symposium on Deep Learning (SSDL 2019) in Norrköping June 11-13. |

| 2019-05-20: | Invited presentation at the CLIM (Computational Imaging with Novel Image Modalities) workshop held at INRIA in Rennes, France, May 27-28. |

| 2019-05-10: | Our paper, Compact and intuitive data-driven BRDF models, has been accepted to The Visual Computer. |

| 2019-03-20: | Our paper "A Unified Framework for Compression and Compressed Sensing of Light Fields and Light Field Videos" has been accepted to ACM Transactions on Graphics and been invited for presentation at ACM SIGGRAPH 2019. |

| 2019-03-15: | Our paper "Single-frame Regularization for Temporally Stable CNNs" has been accepted to CVPR 2019, see the arXiv preprint. |

| 2019-01-01: | I have a new position at Linköping University as full professor in computer graphics. |

| 2018-12-14: | Ehsan Miandji successfully defended his PhD thesis titled: Sparse Representation of Visual Data for Compression and Compressed Sensing. |

| 2018-10-22: | Invited presentation at the ELLIIT Annual Workshop, 2018. |

| 2018-08-05: | I have been invited to present 7DLabs Inc. and our work in synthetic data generation for machine learning in the Autonomous Driving Simulation and Visualization BOF at SIGGRAPH 2018. |

| 2018-06-06: | Gabriel Eilertsen defended his PhD thesis: The High Dynamic Range Imaging Pipeline - Tone Mapping, Distribution, and Single Exposure Reconstruction. |

| 2018-06-26: | WASP-AI will fund our new industrial PhD student with SECTRA directed towards digital pathology. |

| 2018-06-19: | Visual Sweden has granted 3 years of funding for our new initiative Center for Augmented Intelligence (CAI). |

| 2018-05-27: | Our paper Multi-Shot Single Sensor Light Field Camera Using a Color Coded Mask has been accepted to EUSIPCO 2018. |

| 2018-05-27: | Our paper OMP-Based DOA Estimation Performance Analysis has been accepted for publication in Elsevier Digital Signal Processing. |

| 2018-05-15: | I'm honored to be on the committee for Alessandro Dal Corso’s PhD defence at DTU Compute. |

| 2018-03-29: | I will be conference chair for SCIA 2019 to be held in Norrköping June 10-11 2019. |

| 2018-01-23: | I'm the recipient of Swedbank's research stipend. |

| 2017-10-18: | Oliver Staadt and I are chairing the scientific program at the 23rd ACM Symposium on Virtual Reality Software and Technology, VRST 2017. |

| 2017-10-18: | Vinnova funds our new project in which we are generating synthetic training data for deep learning in automotive applications. |

| 2017-10-03: | Our paper: HDR image reconstruction from a single exposure using deep CNNs has been accepted to ACM SIGGRAPH Asia 2017. |

| 2017-09-14: | Our paper On Probability of Support Recovery for Orthogonal Matching Pursuit Using Mutual Coherence has been accepted to IEEE Signal Processing Letters. |

| 2017-08-31: | Invited speaker at the jubilee symposium Materials and Technology for a Digital Future for the Knut and Alice Wallenberg Foundation's 100-year anniversary, Sept. 13. |

| 2017-08-30: | Our paper: Efficient BRDF Sampling Using Projected Deviation Vector Parameterization by Tanaboon Tongbuasirilai, Murat Kurt, and Jonas Unger has been accepted to the ICCV workshop BxDF4CV. |

| 2017-07-30: | Our paper: A Performance Guarantee for Orthogonal Matching Pursuit Using Mutual Coherence has been accepted to Springer Circuits, Systems, and Signal Processing. |

| 2017-05-10: | Our paper BriefMatch: Dense binary feature matching for real-time optical flow estimation has been accepted to SCIA 2017. |

| 2017-05-14: | Presentation of our project on image synthesis for machine learning at SSBA 2017. |

| 2017-01-20: | Our STAR - A comparative review of tone-mapping algorithms for high dynamic range video has been accepted for publication at Eurographics 2017. |

| 2016-10-01: | Vinnova is funding our new project Surgeons View. |

| 2016-07-24: | Presenting our open source HDR video compression API LumaHDRv at SIGGRAPH 2016 in Anaheim, US. |

| 2016-05-25: | We have three papers accepted to ICIP 2016. |

| 2016-05-15: | SIGRAD 2016 - We are giving two research overview presentations on Real-time tone mapping and compressive image reconstruction. |

| 2016-05-12: | Our paper Time-offset Conversations on a Life-Sized Automultiscopic Projector Array has been accepted for publication at the CVPR workshop Computational Cameras and Displays 2016. |

| 2016-04-21: | Norrköpings fond för forskning och utveckling will fund our new project Digitala verktyg för en levande historia, which will run 2016 - 2017. |

| 2016-04-21: | We have two talks on BRDF editing and HDR-video encoding accepted to SIGGRAPH 2016. |

| 2016-04-14: | Our new book on HDR-video is out. We have contributed with two chapters. |

| 2016-03-14: | Our Eurographics 2016 tutorial "The HDR-video pipeline" has been accepted. |

| 2016-02-16: | Invited presentation at the LiU - Ericsson collaboration agreement signing. |

| 2016-01-11: | A new PhD student, Apostolia Tsirikoglou, has joined our group. |

| 2015-12-11: | Our paper: Adaptive dualISO reconstruction is now available online. |

| 2015-12-04: | Joel Kronander is defending his PhD thesis: Physically based rendering of Synthetic Objects in real environments |

| 2015-11-18: | Our open source HDR-video codec Luma HDRv is now on-line. |

| 2015-11-14: | Our paper: Adaptive dualISO HDR-reconstruction, has been accepted to EURASIP Journal on Image and Video Processing |

| 2015-11-08: | Our Science Council (VR) project proposal on new Monte Carlo algorithms for image synthesis was accepted! |

| 2015-11-08: | Check out our Siggraph Asia '15 course: Capturing reality for computer graphics applications |

| 2015-11-07: | Joel Kronander is presenting our Siggraph Asia '15 technical brief: Pseudo-marginal Metropolis Light Transport |

| 2015-09-18: | Our paper Real-time noise-aware tone mapping is accepted to SIGGRAPH Asia 2015. |

| 2015-08-10: | Check out the automultiscopic display at Siggraph 2015 E-Tech! |

| 2015-04-21: | We are building the imaging pipeline for the KTH MIST satellite |

| 2015-03-20: | STAR - Photorealistic rendering of mixed reality scenes accepted to Eurographics 2015 |

| 2015-01-28: | Paper - Compressive Image Reconstruction in Reduced Union of Subspaces accepted to Eurographics 2015 |

| 2014-11-07: | Presentation of our appearance capture project at Stadshuset, Norrköping |

| 2014-10-21: | Invited speaker at Populärvetenskapliga Veckan LiU. |


Jonas Unger 2019 |