IEEE International Conference on Computational Photography (ICCP) 2013

Joel Kronander, Stefan Gustavson, Gerhard Bonnet, Jonas Unger

Media and Information Technology, Linköping University, Sweden, and SpheronVR AG, Germany

Abstract:

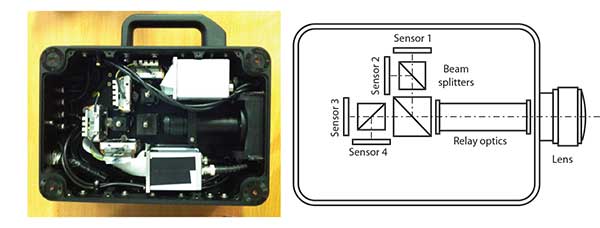

HDR reconstruction from multiple exposures poses several challenges. Previous HDR reconstruction techniques have treated debayering, denoising, resampling (alignment) and exposure fusion as separate steps. We instead present a unifying approach that performs HDR assembly directly from raw sensor data in a single processing operation. Our algorithm includes a spatially adaptive HDR reconstruction based on fitting local polynomial approximations to the observed sensor data, using a localized likelihood approach that incorporates spatially varying sensor noise. We also present a realistic camera noise model adapted to HDR video. The method allows reconstruction at an arbitrary resolution and with an arbitrary output mapping. We present an implementation in CUDA and show real-time performance for an experimental 4 Mpixel multi-sensor HDR video system. We further show that our algorithm has clear advantages over state-of-the-art methods in terms of both flexibility and reconstruction quality.
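The abstract does not state the estimator's equations. The following is therefore only a minimal sketch of the general idea of a noise-aware local polynomial fit, under stated assumptions. It treats a single colour channel, uses a first-order (linear) polynomial, and assumes independent Gaussian noise. Under those assumptions, a kernel-weighted localized likelihood reduces to weighted least squares with weights "spatial kernel / variance". The names `to_sample`, `solve3` and `local_linear_fit`, the parameters `gain`, `read_var`, `sat` and `h`, and the shot-plus-read-noise variance model are all hypothetical illustrations. They are not the authors' camera noise model or their CUDA implementation.

```python
import math
from typing import List, Optional, Tuple

# (x, y, radiance estimate, variance of that estimate); single colour channel only.
Sample = Tuple[float, float, float, float]


def to_sample(x: float, y: float, raw: float, t: float,
              gain: float = 1.0, read_var: float = 4.0,
              sat: float = 4095.0) -> Optional[Sample]:
    """Convert one raw value captured with exposure time t into a radiance sample.

    Hypothetical noise model: Var(raw) = gain * raw + read_var (shot + read noise).
    Saturated values carry no usable information and are discarded.
    """
    if raw >= sat:
        return None
    z = raw / t                                   # radiance estimate
    var = (gain * max(raw, 0.0) + read_var) / (t * t)  # variance scales by 1/t^2
    return (x, y, z, var)


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    n = 3
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def local_linear_fit(samples: List[Sample], x0: float, y0: float, h: float) -> float:
    """Fit z ~ c0 + c1*dx + c2*dy around (x0, y0) and return c0, the value at (x0, y0).

    Each sample is weighted by a Gaussian spatial kernel of bandwidth h divided
    by its noise variance, so noisier samples contribute less to the estimate.
    """
    ata = [[0.0] * 3 for _ in range(3)]  # weighted normal-equation matrix
    atb = [0.0] * 3                      # weighted right-hand side
    for x, y, z, var in samples:
        dx, dy = x - x0, y - y0
        w = math.exp(-(dx * dx + dy * dy) / (2.0 * h * h)) / var
        basis = (1.0, dx, dy)
        for i in range(3):
            atb[i] += w * basis[i] * z
            for j in range(3):
                ata[i][j] += w * basis[i] * basis[j]
    return solve3(ata, atb)[0]
```

Because the output location (x0, y0) is a free parameter, the same fit can be evaluated on any output grid. This illustrates, in simplified form, why a local-fit formulation decouples the reconstruction resolution from the sensor grid. Samples from all exposures can simply be pooled in `samples`, and the inverse-variance weighting then favours the less noisy, unsaturated measurements.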

Keywords: High dynamic range imaging, HDR-video, local polynomial approximation

Documents:

Paper preprint: Unified HDR-Reconstruction from raw CFA Data (.pdf)

Acknowledgements:

We would like to thank Per Larsson for the construction and setup of the camera hardware and invaluable help in the lab, Anders Ynnerman for insightful discussions and proofreading of the manuscript, and the anonymous reviewers for helping us improve the paper. This work was funded by the Swedish Foundation for Strategic Research through grant IIS11-0081, Linköping University Center for Industrial Information Technology (CENIIT), and the Swedish Research Council.

Jonas Unger 2019